In this post we explore how to build your own on-device, LLM-powered AI agent that leverages RAG (Retrieval-Augmented Generation) to correctly answer questions about the characters of the Wizarding World of Harry Potter. To do this, we combine Ollama as our local inference engine, Gemma as our local LLM, our newly released RETSim ultra-fast near-duplicate text embeddings, and USearch for efficient indexing and retrieval. For those who want to jump right into the code, the notebook is available on the UniSim GitHub.

Thanks to Ollama, it is very easy to run LLMs locally when you want to experiment quickly or don’t want to rely on a paid API. These local models are significantly smaller than the server-side ones (~8 billion parameters vs. tens of billions) so that they can run on commodity laptops, and they have become incredibly good. For example, Gemma, our very own LLM, which received an update at Google I/O this week, exhibits very strong performance across benchmarks.

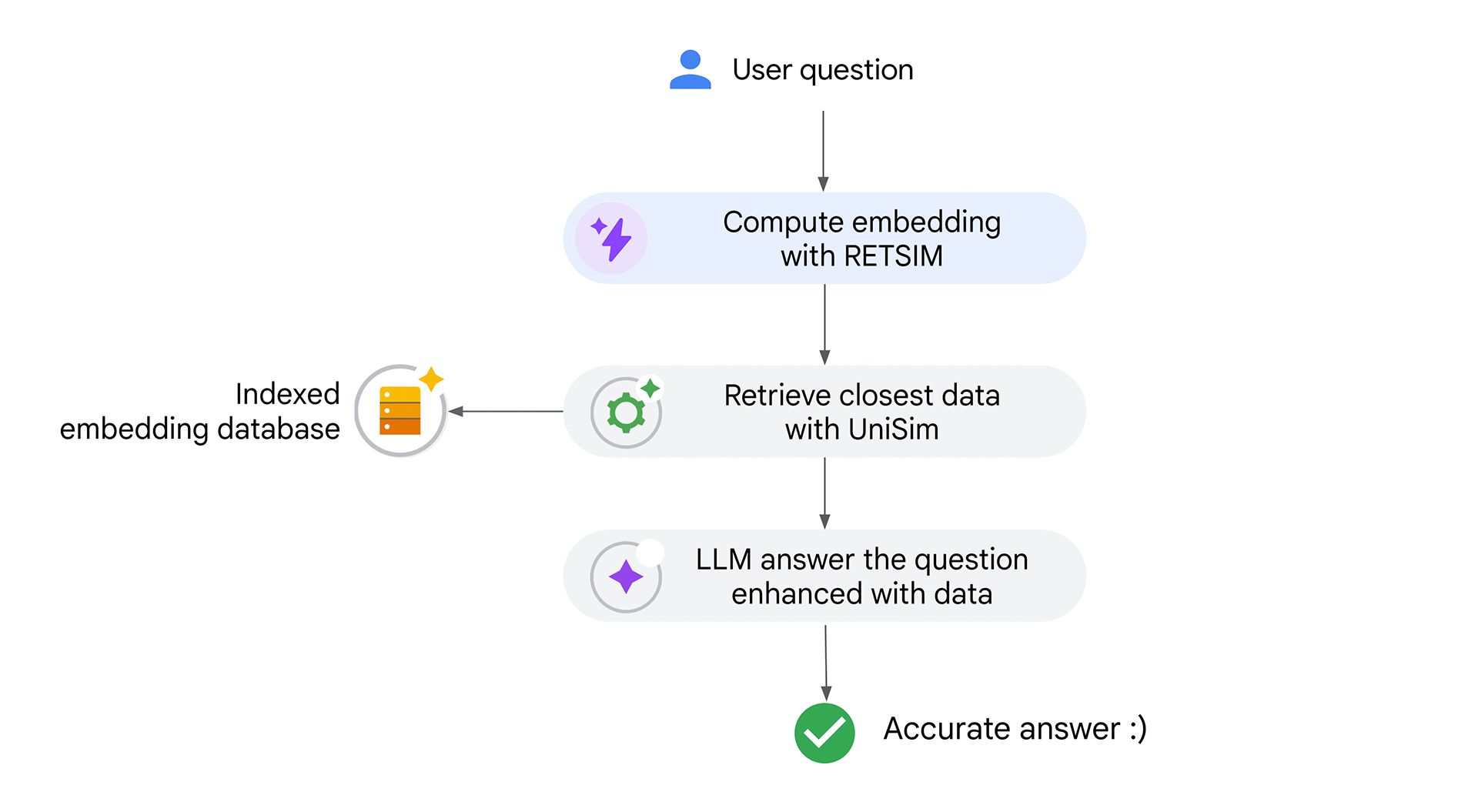

However, a smaller size means the model also knows and recalls less about the world and is therefore less able to answer questions. Moreover, small models are even less able to answer questions about your own data, which was obviously not part of their training data. The main way to address this data freshness issue is Retrieval-Augmented Generation, aka RAG, which, as shown in the diagram, combines the LLM’s capabilities with a retrieval system that quickly looks up the data most relevant to the user query and adds it to the LLM context so the model can use it to answer the question.

Retrieval-Augmented Generation (RAG) combines LLM reasoning capabilities with a data retrieval system to enhance the LLM’s ability to accurately answer questions.
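To make the retrieve-then-generate flow concrete, here is a minimal sketch in plain Python. The names `answer_with_rag`, `toy_retrieve`, `echo_generate`, and the two-record `KNOWLEDGE` list are hypothetical stand-ins introduced for illustration; a real pipeline would plug in an embedding index and an actual LLM call instead.

```python
from typing import Callable, Dict, List

# Hypothetical two-record knowledge base, for illustration only.
KNOWLEDGE: List[Dict[str, str]] = [
    {"Name": "Newt Scamander", "Profession": "Magizoologist"},
    {"Name": "Harry Potter", "School": "Hogwarts - Gryffindor"},
]


def toy_retrieve(question: str) -> List[Dict[str, str]]:
    """Stand-in retriever: keep records whose name appears in the question."""
    q = question.lower()
    return [r for r in KNOWLEDGE if r["Name"].lower() in q]


def echo_generate(prompt: str) -> str:
    """Stand-in for an LLM call: returns the prompt so we can inspect it."""
    return prompt


def answer_with_rag(question: str,
                    retrieve: Callable[[str], List[Dict[str, str]]],
                    generate: Callable[[str], str]) -> str:
    """Retrieve relevant records, add them to the prompt, then generate."""
    records = retrieve(question)
    context = "; ".join(f"{k}: {v}" for r in records for k, v in r.items())
    prompt = (f"Using the following data: {context}\n"
              f"Answer the following question: {question}")
    return generate(prompt)


out = answer_with_rag("Which school is Harry Potter part of?",
                      toy_retrieve, echo_generate)
print(out)
```

Because the generator here simply echoes its input, the printed text is the augmented prompt itself, which makes it easy to see exactly what extra context the model would receive.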

So far, most text embeddings used for RAG focus on semantic similarity, are not resilient to typos, and are fairly large (hundreds of millions of parameters), which makes them slow to use on-device and poorly suited to finding exact or near-duplicate records. Over the last two years, with Marina, Owen, and the team, we have been working on a new type of text similarity embedding to address these shortcomings. The result is called RETSim (ICLR ’24), a very small custom transformer model (less than 1M parameters) that significantly outperforms other embeddings for near-duplicate text similarity and retrieval.

This combination of speed and typo resilience makes RETSim the perfect embedding to combine with Gemma and Ollama for building a RAG pipeline that looks up data by consumer name, address, or any other field where typos might appear. Recall that 20-30% of user queries commonly exhibit typos, so this is a problem that should not be overlooked.
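To build some intuition for why character-level similarity tolerates typos, here is a small sketch using character trigrams and cosine similarity. This is a classic baseline and not how RETSim works internally; the function names `char_ngrams`, `cosine`, and `typo_similarity` are hypothetical and chosen for illustration.

```python
import math
from collections import Counter


def char_ngrams(text: str, n: int = 3) -> Counter:
    """Count overlapping character n-grams of the padded, lowercased text."""
    padded = f" {text.lower().strip()} "
    return Counter(padded[i:i + n] for i in range(len(padded) - n + 1))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse n-gram count vectors."""
    dot = sum(a[g] * b[g] for g in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def typo_similarity(x: str, y: str) -> float:
    """Similarity in [0, 1]; a misspelling still shares many n-grams."""
    return cosine(char_ngrams(x), char_ngrams(y))


print(typo_similarity("dumbledore", "dubldore"))  # misspelling of the same name
print(typo_similarity("dumbledore", "hermione"))  # unrelated name
```

The misspelled name keeps several trigrams in common with the correct one, while the unrelated name shares none, so the first score comes out higher. A learned embedding such as RETSim aims for the same property with far better robustness.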

To illustrate how to combine all those technologies into a working prototype, we are going to build an end-to-end RAG system that answers questions about the Harry Potter Wizarding World characters using Kaggle’s Characters in Harry Potter Books dataset. The notebook that contains all the steps discussed here is available on the UniSim GitHub, UniSim being the package that provides RETSim.

Setup

First things first, we install the packages we need: Ollama to run Gemma locally, and UniSim to index data with RETSim and retrieve it with USearch.

!pip install -U tqdm Iprogress unisim ollama tabulate

Next we perform a pre-flight check to make sure everything is working properly, including downloading the latest Gemma version if needed.

import ollama

# Make sure Gemma is installed with Ollama; pull it otherwise
MODEL = 'gemma'
try:
    ollama.show(MODEL)
except Exception as e:
    print(f"can't find {MODEL}: {e} installing it")
    ollama.pull(MODEL)

# report which model size was loaded
info = ollama.show(MODEL)
print(f"{MODEL.capitalize()} {info['details']['parameter_size']} loaded")

We then check that UniSim/RETSim and USearch are working correctly by testing the string similarity against a typo. This capability is also useful for many other applications, such as deduplicating records and cleaning up datasets.

import unisim

VERBOSE = True  # interactive demo, so we want to see what happens
txtsim = unisim.TextSim(verbose=VERBOSE)

# check that it works as intended
sim_value = txtsim.similarity("Gemma", "Gemmaa")
if sim_value > 0.9:
    print(f"Similarity {sim_value} - TextSim works as intended")
else:
    print(f"Similarity {sim_value} - Something is very wrong with TextSim")

Testing Questions

Before building the RAG pipeline, let’s evaluate how much Gemma knows about the Wizarding World by asking a few questions of increasing difficulty, and let’s throw some typos into the mix to see how they affect the model’s performance. I added a type next to each question to indicate what kind of test it is.

questions = [
    {'q': 'Which School is Harry Potter part of?', 'type': 'basic fact'},
    {'q': 'Who is ermionne?', 'type': 'typo'},
    {'q': 'What is Aberforth job?', 'type': 'harder fact'},
    {'q': 'what is dubldore job?', 'type': 'harder fact and typo'},
    {'q': 'Which school is Nympadora from?', 'type': 'hard fact'},
]

Direct Generation Answers

Let’s run those through Gemma via Ollama and see what type of answer we get.
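The printing loop that follows reads each answer from `q['direct']`, which the notebook fills in beforehand. As a rough sketch of that step, assuming a `generate` callable that maps a prompt to the model's text reply, the answers could be collected like this. The names `add_direct_answers` and `fake_generate` are hypothetical; the fake generator only exists so the sketch runs without a model.

```python
from typing import Callable, Dict, List


def add_direct_answers(questions: List[Dict[str, str]],
                       generate: Callable[[str], str]) -> List[Dict[str, str]]:
    """Store the model's raw answer under the 'direct' key of each question."""
    for q in questions:
        q["direct"] = generate(q["q"])
    return questions


def fake_generate(prompt: str) -> str:
    """Stand-in generator so the sketch runs without a local model."""
    return f"(model answer to: {prompt})"


demo = add_direct_answers([{"q": "Who is ermionne?", "type": "typo"}],
                          fake_generate)
print(demo[0]["direct"])
```

With Ollama, the `generate` argument could presumably wrap `ollama.generate(model=MODEL, prompt=prompt)['response']`; check the notebook for the exact helper it uses.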

# assumes each question already carries Gemma's raw answer under 'direct'
print("[answers without retrieval]\n")
for q in questions:
    a = q['direct'][:100].replace('\n', ' ')  # first 100 characters on one line
    print(f"Q:{q['q']}? (type: {q['type']})")
    print(f"Direct answer: {a}..")
    print("")

Here are the answers we got from Gemma without any retrieval.

Which School is Harry Potter part of?? (type: basic fact)

Answer: Hogwarts School of Witchcraft and Wizardry is the school that Harry Potter attends…

This is not a great answer: Hogwarts is correct, but the house, Gryffindor, is missing.

Who is ermionne?? (type: typo)

Answer: Ermionne is a French fashion designer known for her colorful and playful designs, primarily focused …

Here the model is totally thrown off by the typo because it doesn’t have enough context about the query. Adding to the prompt that the questions are specifically about Harry Potter would probably help (feel free to experiment with prompt tuning!).

What is Aberforth job?? (type: harder fact)

Answer: Aberforth is a fictional character in the Harry Potter series of books and films. He does not have a…

Here the model is unable to answer because this requires very intimate knowledge of all the secondary characters of Harry Potter.

What is dubldore job?? (type: harder fact and typo)

Answer: Dublador is a voice actor who provides voices for characters in animated films, television shows…

Here the model tried to correct the typo but got it wrong. RETSim will likely do better because it is explicitly trained to project common typos together in the embedding space. As in the Hermione case, adding to the prompt that the questions are specifically about Harry Potter would probably help.

Which school is Nympadora from?? (type: hard fact)

Answer: Nympadora is a character from the book series “Harry Potter” and did not attend any school. She is a…

As with the Aberforth question, the model knows Nymphadora Tonks is a character of this world but doesn’t have the knowledge needed to answer, so it came up with the wrong answer.

Indexing Harry Potter Characters Data

The first step in building our RAG pipeline, which helps the LLM with additional context, is to load the data, compute the embeddings, and index them. We simply index the character names using RETSim embeddings and, during retrieval, return the data associated with the matched name to help the model.

import json

with open('data/harry_potter_characters.json') as f:
    raw_data = json.load(f)

CHARACTERS_INFO = {}  # we are deduping the data using the name as key
for d in raw_data:
    name = d['Name'].lower().strip()
    CHARACTERS_INFO[name] = d
print(f'{len(CHARACTERS_INFO)} characters loaded from harry_potter_characters.json')

# indexing data with text sim
txtsim.reset_index()  # clean up in case we run this cell multiple times
idx = txtsim.add(list(CHARACTERS_INFO.keys()))
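The `lookup` helper used in the rest of this post is defined in the notebook and is not reproduced here. To show the general idea (normalize the name, find the closest indexed keys, return their records), here is a rough stand-in built on the standard library's `difflib` rather than on RETSim and USearch. The names `toy_lookup` and `DEMO_INFO`, and the `cutoff` value, are assumptions made for this sketch.

```python
import difflib
from typing import Dict, List

# Tiny hypothetical index; the real one holds every character in the dataset.
DEMO_INFO: Dict[str, Dict[str, str]] = {
    "newt scamander": {"Name": "Newt Scamander", "Profession": "Magizoologist"},
    "sam": {"Name": "Sam"},
}


def toy_lookup(name: str, k: int = 5, cutoff: float = 0.5) -> List[Dict[str, str]]:
    """Return the records of up to k keys that are close to the given name."""
    key = name.lower().strip()  # same normalization as used when indexing
    matches = difflib.get_close_matches(key, list(DEMO_INFO), n=k, cutoff=cutoff)
    return [DEMO_INFO[m] for m in matches]  # best match first


results = toy_lookup("New Scamramber")
print(results[0]["Name"])
```

A string-matching stand-in like this compares the query against every key, so it would not scale to large datasets; that is where fixed-size embeddings and an approximate nearest neighbor index become useful.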

Let’s quickly test our indexing to see if it works on one of my favorite characters, Newt Scamander, but with a typo in his name.

r = lookup("New Scamramber", verbose=True)  # verbose to show all the matches
print('')
print('[best lookup result]')
print(f"name: {r[0]['Name']} / School: {r[0]['School']} / Profession: {r[0]['Profession']}")
print(f"Description: {r[0]['Descr']}")

Query 0: "new scamramber"
Most similar matches:

  idx  is_match      similarity  text
-----  ----------  ------------  -----------------------
 1005  False               0.81  newt scamander
 1006  False               0.71  newt scamander's mother
 1172  False               0.63  sam

[best lookup result]
name: Newt Scamander / School: Hogwarts - Hufflepuff / Profession: Magizoologist
Description: Newton “Newt” Scamander is a famous magizoologist and author of Fantastic Beasts and Where To Find Them (PS5) as well as a number of other books. Now retired, Scamander lives in Dorset with his wife Porpentina (FB). He received the Order of Merlin, second…

The results look great: the RETSim embeddings work as intended, so for now we will just use the first result and pass its data to the LLM context before answering the user question.

RAG implementation

The RAG implementation is going to be in four steps:

- Ask Gemma for the name of the character so we can look it up. Given that we have access to a powerful LLM, using it to extract the named entity is, I think, the simplest and most robust way to do so.

- Retrieve the nearest match info from our UniSim index.

- Replace the name in the user query with the looked-up name to fix the typo, which is very important and often overlooked, and then inject the information we retrieved into the query.

- Answer the user’s question and impress them with our extensive knowledge of the Wizarding World of Harry Potter!
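The name replacement step deserves a closer look, since an LLM reply may come back with quotes, trailing punctuation, or different casing than the user's query. Below is a minimal sketch of a slightly more defensive version of that step. The helpers `clean_entity` and `repair_query` are hypothetical and not part of the notebook.

```python
import re


def clean_entity(raw: str) -> str:
    """Strip whitespace, quotes, and trailing punctuation from an LLM reply."""
    return raw.strip().strip("'\"").rstrip(".!?,").strip()


def repair_query(query: str, extracted: str, canonical: str) -> str:
    """Replace the (possibly misspelled) extracted name with the canonical one."""
    name = clean_entity(extracted)
    if not name:
        return query  # nothing usable was extracted; leave the query untouched
    # Case-insensitive literal match; the lambda avoids escape processing
    # in the replacement string.
    return re.sub(re.escape(name), lambda _m: canonical, query,
                  flags=re.IGNORECASE)


fixed = repair_query("what is dubldore job?", " 'Dubldore.' \n", "Albus Dumbledore")
print(fixed)
```

Once the query carries the canonical name, the retrieved record and the question refer to the same entity, which gives the model a much easier job when it reads the augmented prompt.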

These four steps translate into the following simple code, which uses helper functions defined earlier and available in the Colab notebook.

def rag(prompt: str, k: int = 5, threshold: float = 0.9, verbose: bool = False) -> str:
    # normalizing the prompt
    prompt = prompt.lower().strip()

    # ask Gemma who is the character
    char_prompt = f"In the following sentence: '{prompt}' who is the subject? reply only with name."
    if verbose:
        print(f"Char prompt: {char_prompt}")
    character = generate(char_prompt)
    if verbose:
        print(f"Character: '{character}'")

    # lookup the character
    data = lookup(character, k=k, threshold=threshold, verbose=verbose)

    # augmented prompt
    # replace the name in the prompt with the one in the rag
    prompt = prompt.replace(character.lower().strip(), data[0]['Name'].lower().strip())
    aug_prompt = f"Using the following data: {data} answer the following question: '{prompt}'. Don't mention your sources - just the answer."
    if verbose:
        print(f"Augmented prompt: {aug_prompt}")
    response = generate(aug_prompt)
    return response

rag(questions[-1]['q'], verbose=True)

RAG Answers

Let’s see our RAG in Accio, hum, sorry, in action and compare it to the directly generated answers we got before. Here are the answers we got from Gemma with retrieval:

Which School is Harry Potter part of?? (type: basic fact)

Direct Answer: Hogwarts School of Witchcraft and Wizardry is the school that Harry Potter attends…

RAG answer: Harry Potter is part of Hogwarts - Gryffindor.

Here the RAG answer is more precise: it adds Gryffindor, which is the expected answer. The answer is also more concise and to the point.

Who is ermionne?? (type: typo)

Direct Answer: Ermionne is a French fashion designer known for her colorful and playful designs, primarily focused ..

RAG answer: Hermione Granger is a resourceful, principled, and brilliant witch known for her academic prowess an

Using the RAG information, Gemma figured out it was about Hermione from Harry Potter and correctly answered.

What is Aberforth job?? (type: harder fact)

Direct Answer: Aberforth is a fictional character in the Harry Potter series of books and films. He does not have a..

RAG answer: Aberforth was a barman.

The LLM is able to leverage the retrieved information to make up for its lack of knowledge, which is the fundamental advantage of RAG. This technique allows us to enrich Gemma’s reasoning capabilities with personalized data to provide more accurate and useful answers.

What is dubldore job?? (type: harder fact and typo)

Direct Answer: Dublador is a voice actor who provides voices for characters in animated films, television shows..

RAG answer: Headmaster at Hogwarts School

Again, RAG really helped with the context and quality of the answer. RETSim’s typo resilience worked perfectly, making it the perfect match for LLMs when building a RAG pipeline that looks up records and uses them to answer typo-laden user queries.

Which school is Nympadora from?? (type: hard fact)

Direct Answer: Nympadora is a character from the book series “Harry Potter” and did not attend any school. She is a..

RAG answer: Hogwarts - Hufflepuff

With its retrieved data, Gemma is now a master at answering questions about the Wizarding World, despite having far fewer parameters than server-side LLMs!
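To put a rough number on this comparison, here is a small sketch that checks whether a key term appears in each answer. The answers are copied from this post (truncated as shown above); the expected keywords and the helper name `hits` are assumptions made for illustration, and a simple keyword match is of course only a crude proxy for correctness.

```python
from typing import Dict, List

# Answers copied from the post; 'expected' keywords are illustrative assumptions.
RESULTS: List[Dict[str, str]] = [
    {"expected": "Gryffindor",
     "direct": "Hogwarts School of Witchcraft and Wizardry is the school that Harry Potter attends",
     "rag": "Harry Potter is part of Hogwarts - Gryffindor."},
    {"expected": "Hermione",
     "direct": "Ermionne is a French fashion designer known for her colorful and playful designs, primarily focused",
     "rag": "Hermione Granger is a resourceful, principled, and brilliant witch known for her academic prowess an"},
    {"expected": "barman",
     "direct": "Aberforth is a fictional character in the Harry Potter series of books and films. He does not have a",
     "rag": "Aberforth was a barman."},
    {"expected": "Headmaster",
     "direct": "Dublador is a voice actor who provides voices for characters in animated films, television shows",
     "rag": "Headmaster at Hogwarts School"},
    {"expected": "Hufflepuff",
     "direct": "Nympadora is a character from the book series 'Harry Potter' and did not attend any school. She is a",
     "rag": "Hogwarts - Hufflepuff"},
]


def hits(kind: str) -> int:
    """Count answers of the given kind that contain the expected keyword."""
    return sum(r["expected"].lower() in r[kind].lower() for r in RESULTS)


print(f"direct: {hits('direct')}/{len(RESULTS)}  rag: {hits('rag')}/{len(RESULTS)}")
```

On a larger question set, the same kind of check could help track whether changes to the prompt or the retrieval threshold actually improve the answers.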

Conclusion

In conclusion, this short post has hopefully highlighted why retrieval-augmented generation is critical when creating AI agents, in particular on-device ones that use smaller models with less knowledge about the world. It also highlights why RETSim focuses on near-duplicate matching and speed rather than on more traditional semantic text similarity, which makes it very useful for RAG, and it will hopefully inspire you to use it in your own pipeline. We are looking forward to seeing what you will build with Gemma, Ollama, USearch and RETSim - please keep us posted on social media.


A bientôt 👋