This post looks at the main challenges that arise when training a classifier to combat fraud and abuse.

At a high level, what makes training a classifier to detect fraud and abuse unique is that it deals with data generated by an adversary that actively attempts to evade detection. Successfully training a classifier in such adversarial settings requires overcoming the following four challenges:

- Non-stationarity: Abuse fighting is a non-stationary problem because attacks never stop evolving. This evolution renders past training data obsolete and forces classifiers to be retrained continuously to remain accurate.

- Lack of ground truth: Collecting accurate attack training data is very difficult because, by nature, attackers attempt to conceal their activities.

- Ambiguous data taxonomy: Anti-abuse classifiers have to be very accurate despite dealing with data and taxonomy ambiguity. When you think about it, even the well-established concept of spam is ill defined.

- Lack of obvious features: Last but not least, as defenders we have to defend all products, which leads us to find ways to apply AI to products that are not AI friendly because they lack rich content and features.

This post explores each of these challenges in turn.

This post is the second in a series of four dedicated to providing a concise overview of how to harness AI to build robust anti-abuse protections. The first post explains why AI is key to building robust anti-abuse defenses that keep up with user expectations and increasingly sophisticated attackers. Following the natural progression of building and launching an AI-based defense system, the third post examines classification issues, and the last post looks at how attackers go about attacking AI-based defenses.

This series of posts is modeled after the talk I gave at RSA 2018. Here is a re-recording of this talk:

You can also get the slides here.

Disclaimer: This series is meant to provide an overview for everyone interested in the subject of harnessing AI for anti-abuse defense, and it is a potential blueprint for those who are making the jump. Accordingly, this series focuses on providing a clear high-level summary, purposely avoiding delving into technical details. That being said, if you are an expert, I am sure you will find ideas and techniques that you haven’t heard about before, and hopefully you will be inspired to explore them further.

Let’s get started!

Non-stationary problem

Traditionally, when applying AI to a given problem, you are able to reuse the same data over and over again because the problem definition is stable. This is not the case when combating abuse because attacks never stop evolving. As a result, to ensure that anti-abuse classifiers remain accurate, their training data needs to be constantly refreshed to incorporate the latest types of attacks.

Let me give you a concrete example so it is clear what the difference between a stable problem and an unstable/non-stationary one is.

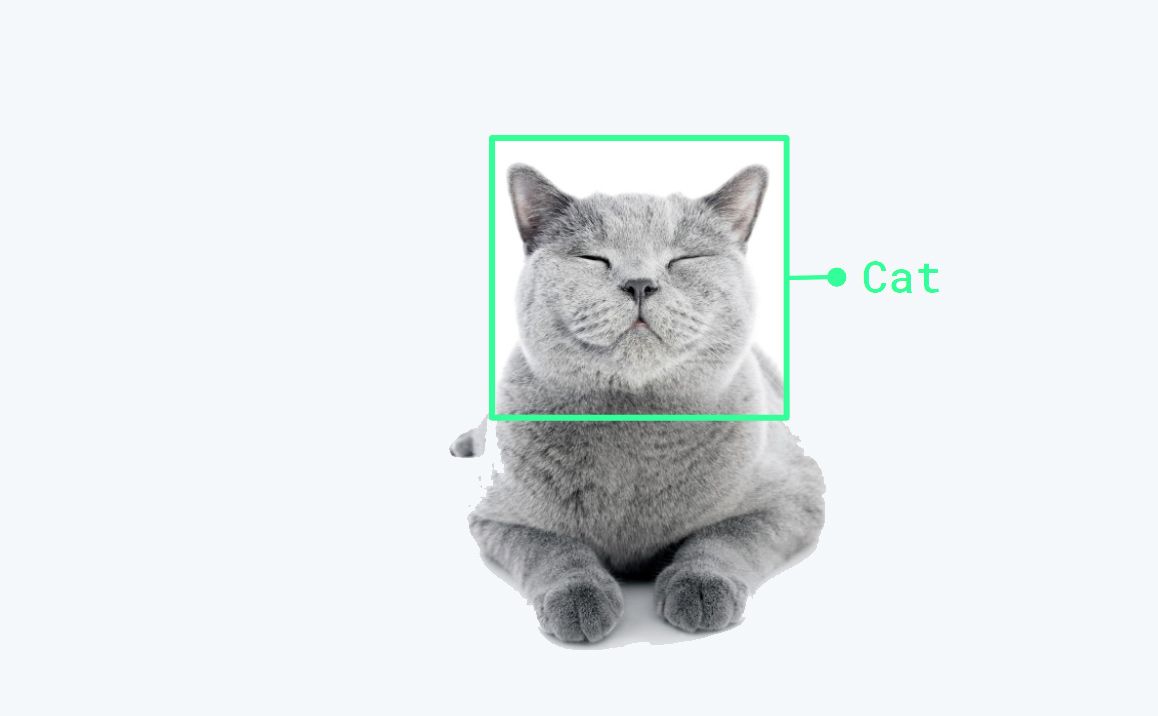

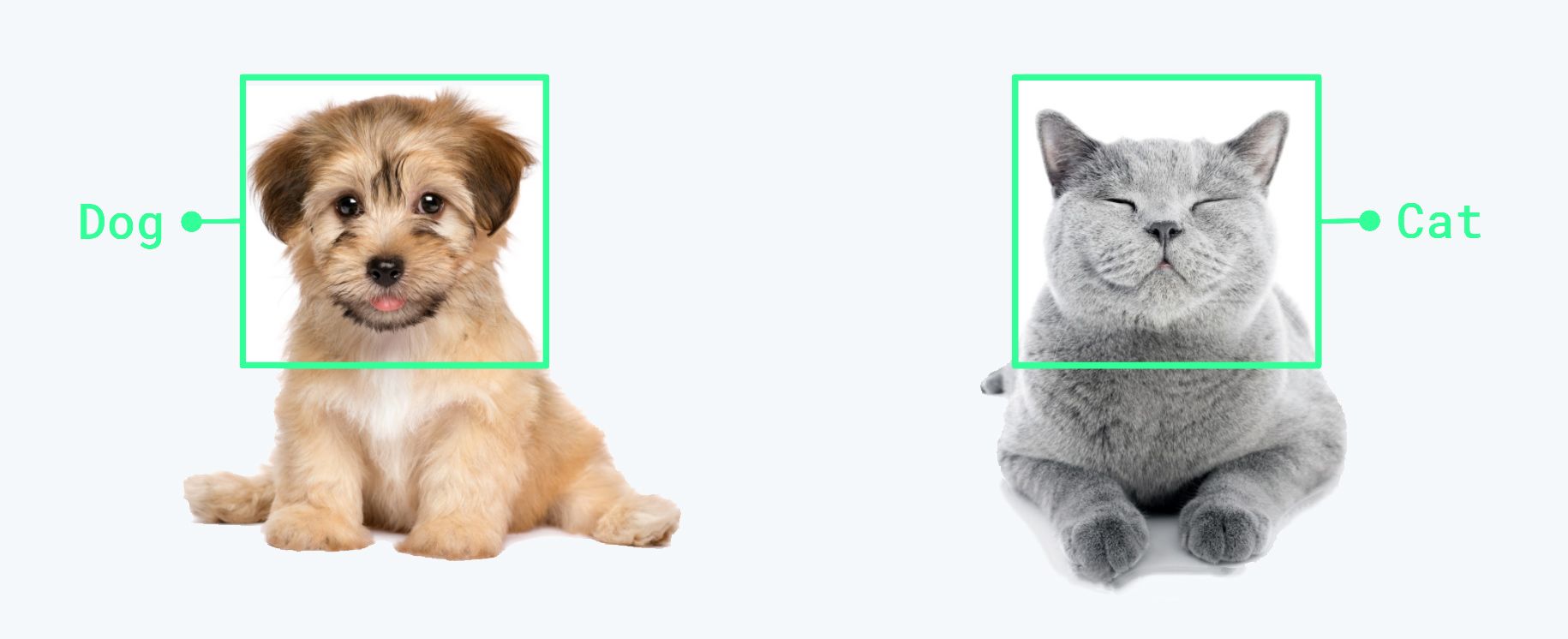

Let’s say you would like to create a classifier that recognizes cats and other animals. This is considered to be a stable problem because animals are expected to look roughly the same for the next few hundred years (barring a nuclear war). Accordingly, to train this type of classifier, you only need to collect and annotate animal images once at the beginning of the project.

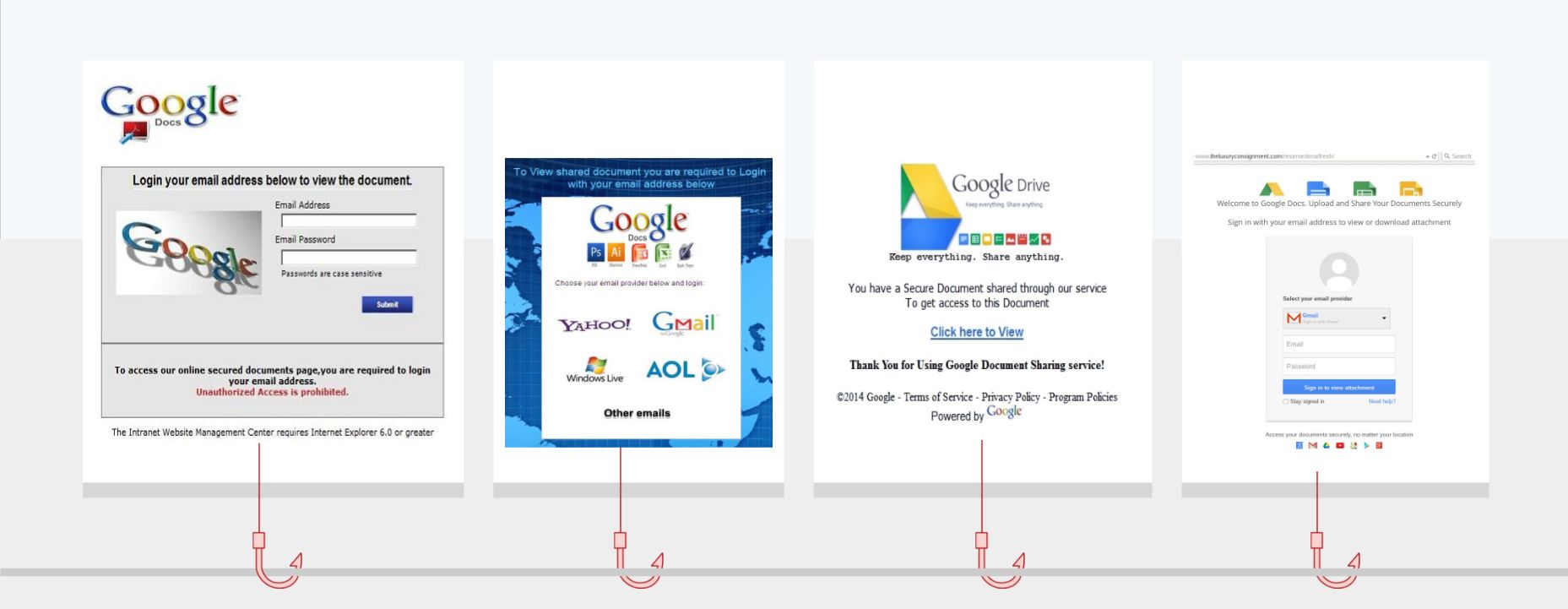

On the other hand, if you would like to train a classifier that recognizes phishing pages, this “collect once” approach doesn’t work because phishing pages keep evolving and look drastically different over time, as visible in the screenshot above.

More generally, while training classifiers to combat abuse, the first key challenge is that past training data becomes obsolete as attacks evolve.

While there is no silver bullet to deal with this obsolescence, here are three complementary strategies that help cope with ever-changing data.

1. Automate model retraining

You need to automate model retraining on fresh data so your model keeps up with the evolution of attacks. When you automate model retraining, it is a good practice to have a validation set that ensures the new model performs correctly and doesn’t introduce regressions. It is also useful to add hyperparameter optimization to your retraining process to maximize your model accuracy.
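The retraining loop described above can be sketched as a "train, validate, then promote" gate. This is a minimal illustration, not a production pipeline: the names `retrain_and_gate`, `train_fn`, and `max_regression` are hypothetical, the toy keyword trainer stands in for a real learning algorithm, and hyperparameter optimization is left out for brevity.

```python
from typing import Callable, Sequence, Tuple

Example = Tuple[str, int]      # (content, label), where 1 means abusive
Model = Callable[[str], int]   # a model maps content to a predicted label


def accuracy(model: Model, validation_set: Sequence[Example]) -> float:
    """Fraction of validation examples the model labels correctly."""
    correct = sum(1 for content, label in validation_set if model(content) == label)
    return correct / len(validation_set)


def retrain_and_gate(
    current_model: Model,
    train_fn: Callable[[Sequence[Example]], Model],
    fresh_data: Sequence[Example],
    validation_set: Sequence[Example],
    max_regression: float = 0.0,
) -> Tuple[Model, bool]:
    """Train a candidate on fresh data and promote it only if it does not regress."""
    candidate = train_fn(fresh_data)
    current_score = accuracy(current_model, validation_set)
    candidate_score = accuracy(candidate, validation_set)
    promoted = candidate_score >= current_score - max_regression
    return (candidate if promoted else current_model), promoted


def train_keyword_model(data: Sequence[Example]) -> Model:
    """Toy trainer (assumption): flag words seen only in abusive examples."""
    bad_words, good_words = set(), set()
    for content, label in data:
        (bad_words if label == 1 else good_words).update(content.lower().split())
    signal = bad_words - good_words
    return lambda content: int(any(w in signal for w in content.lower().split()))


# Usage: the old model only knows about "prize" scams.
old_model: Model = lambda content: int("prize" in content.lower())
fresh = [("verify your account now", 1), ("claim your prize", 1),
         ("meeting at noon", 0), ("lunch tomorrow", 0)]
validation = [("verify account today", 1), ("free prize inside", 1),
              ("see you at lunch", 0), ("project meeting notes", 0)]
model, promoted = retrain_and_gate(old_model, train_keyword_model, fresh, validation)
```

The validation set acts as the regression check: a candidate that scores lower than the model currently in production is simply discarded, so an automated job can run unattended.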

2. Build highly generalizable models

Your models have to be designed in a way that ensures they can generalize enough to detect new attacks. While ensuring that a model generalizes well is complex, making sure your model has enough (but not too much) capacity (i.e., enough neurons) and plenty of training data is a good starting point.

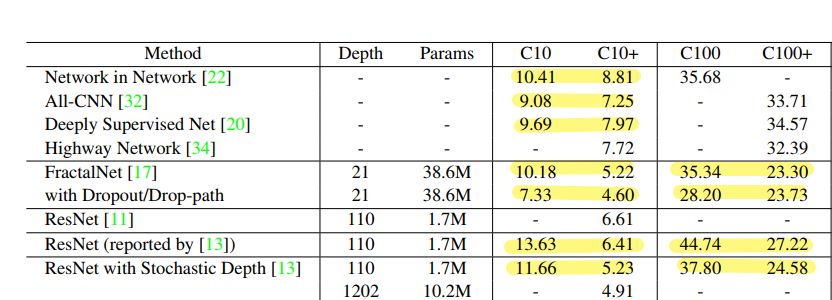

If you don’t have enough real attack examples, you can supplement your training data with data augmentation techniques that increase the size of your corpus by generating slight variations of your attack examples. As visible in the table above, taken from this paper, data augmentation makes models more robust and increases accuracy significantly.
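As a rough illustration of generating slight variations of an attack example, here is a minimal sketch for text content. It assumes the attack examples are short strings and uses a made-up substitution table (`SUBSTITUTIONS`); real augmentation pipelines depend heavily on the data type (images, text, binaries) and are usually richer than this.

```python
import random
from typing import List

# Illustrative character substitutions (an assumption, not a vetted list).
SUBSTITUTIONS = {"a": "@", "e": "3", "i": "1", "o": "0", "s": "$"}


def augment(text: str, n_variants: int, rate: float = 0.3, seed: int = 0) -> List[str]:
    """Return up to n_variants distinct slight variations of text."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    variants = set()
    for _ in range(n_variants * 10):  # bounded number of attempts
        if len(variants) >= n_variants:
            break
        chars = [
            SUBSTITUTIONS[c] if c in SUBSTITUTIONS and rng.random() < rate else c
            for c in text
        ]
        variant = "".join(chars)
        if variant != text:  # keep only genuine variations
            variants.add(variant)
    return sorted(variants)


attack_example = "free prize inside"
augmented = augment(attack_example, n_variants=5)
```

Each variant keeps the label of the example it was derived from, so the augmented strings can be appended to the abusive side of the training set.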

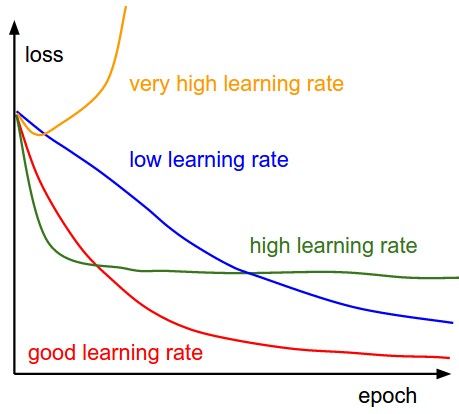

Finally, you should consider other finer, well-documented technical aspects, such as tuning the learning rate and using dropout.

3. Set up monitoring and in-depth defense

Finally, you have to assume your model will be bypassed at some point, so you need to build defense in depth to mitigate this issue. You also need to set up monitoring that will alert you when this occurs. Monitoring for a drop in the number of detected attacks or a spike in user reports is a good starting point.
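The two monitoring signals mentioned above can be sketched as simple ratio checks against a trailing average. The window size and thresholds below are arbitrary placeholders, and the function names are hypothetical; a real deployment would tune them and account for seasonality.

```python
from statistics import mean
from typing import Sequence


def detection_drop_alert(
    daily_detections: Sequence[int], window: int = 7, drop_ratio: float = 0.5
) -> bool:
    """Alert when the latest count falls below drop_ratio times the trailing average."""
    if len(daily_detections) <= window:
        return False  # not enough history to form a baseline
    *history, today = daily_detections
    baseline = mean(history[-window:])
    return today < drop_ratio * baseline


def report_spike_alert(
    daily_reports: Sequence[int], window: int = 7, spike_ratio: float = 2.0
) -> bool:
    """Alert when the latest count exceeds spike_ratio times the trailing average."""
    if len(daily_reports) <= window:
        return False  # not enough history to form a baseline
    *history, today = daily_reports
    baseline = mean(history[-window:])
    return today > spike_ratio * baseline


# A sudden drop in detections may mean attackers found a bypass.
detections = [100, 98, 105, 102, 99, 101, 103, 40]
reports = [10, 10, 10, 10, 10, 10, 10, 35]
drop = detection_drop_alert(detections)
spike = report_spike_alert(reports)
```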

Gmail malicious attacks

Quite often, I get asked how quickly attacks are evolving in practice. While I don’t have a general answer, here is a key statistic that I hope will convince you that attackers indeed mutate their attacks incredibly quickly: 97 percent of Gmail malicious attachments blocked today are different from the ones blocked yesterday.

Fortunately, those new malicious attachments are variations of recent attacks and therefore can be blocked by systems that generalize well and are trained regularly.

Lack of ground truth data

For most classification tasks, collecting training data is fairly easy because you can leverage human expertise. For example, if you want to build an animal classifier, you could ask people to take a picture of animals and tell you which animals are in it.

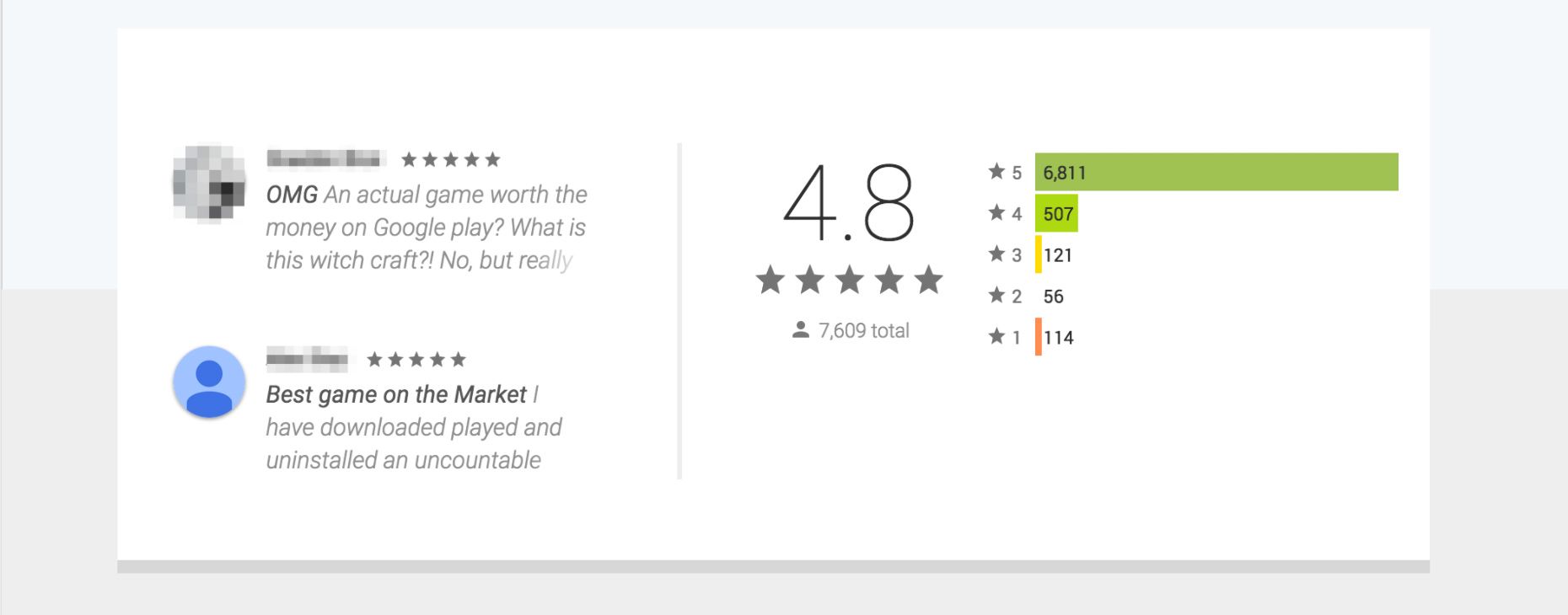

On the other hand, collecting ground truth (training data) for anti-abuse purposes is not that easy because bad actors try very hard to impersonate real users. As a result, it is very hard even for humans to tease apart what is real and what is fake. For instance, the screenshot above showcases two Play store reviews. Would you be able to tell me which one is real and which one is fake?

Obviously telling them apart is impossible because they are both well written and over the top. This struggle to collect abusive content accurately exists all across the board whether it is for reviews, comments, fake accounts or network attacks. By the way, both reviews are real in case you were wondering.☺️

Accordingly, the second challenge on the quest to train a successful classifier is that abusers actively conceal their activities, which makes accurate ground truth data hard to collect.

While no definitive answers exist on how to overcome this challenge, here are three techniques to collect ground truth data that can help alleviate the issue.

1. Applying clustering methods

First, you can leverage clustering methods to expand upon known abusive content to find more of it. It is often hard to find the right balance while doing so because if you are clustering too much, you end up flagging good content as bad, and if you don’t cluster enough, you won’t collect enough data.
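The trade-off described above shows up directly in the similarity threshold. The sketch below is a simplified stand-in for a full clustering algorithm: it expands around known abusive seeds using Jaccard similarity on word sets. The function names and the example strings are invented for illustration.

```python
from typing import Iterable, List, Set


def tokens(text: str) -> Set[str]:
    """Lowercased set of whitespace-separated words."""
    return set(text.lower().split())


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Size of the intersection divided by size of the union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def expand_from_seeds(
    seeds: Iterable[str], unlabeled: Iterable[str], threshold: float
) -> List[str]:
    """Return unlabeled items similar enough to at least one known abusive seed."""
    seed_tokens = [tokens(s) for s in seeds]
    return [
        item
        for item in unlabeled
        if any(jaccard(tokens(item), s) >= threshold for s in seed_tokens)
    ]


seeds = ["win a free phone today"]
unlabeled = [
    "win a free phone now",            # close variant of the seed
    "win a free lunch with the team",  # benign, but shares a few words
    "quarterly report attached",       # unrelated
]
balanced = expand_from_seeds(seeds, unlabeled, threshold=0.6)
too_loose = expand_from_seeds(seeds, unlabeled, threshold=0.3)
too_strict = expand_from_seeds(seeds, unlabeled, threshold=0.9)
```

With these toy inputs, the loose threshold also pulls in the benign lunch message, while the strict threshold collects nothing at all, which is the balance problem in miniature.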

2. Collecting ground truth with honeypots

The controlled settings of honeypots ensure that they only collect attacks. The main difficulty with honeypots is making sure that the collected data is representative of the set of attacks experienced by production systems. Overall, honeypots are very valuable, but it takes a significant investment to get them to collect meaningful attacks.

3. Leverage generative adversarial networks

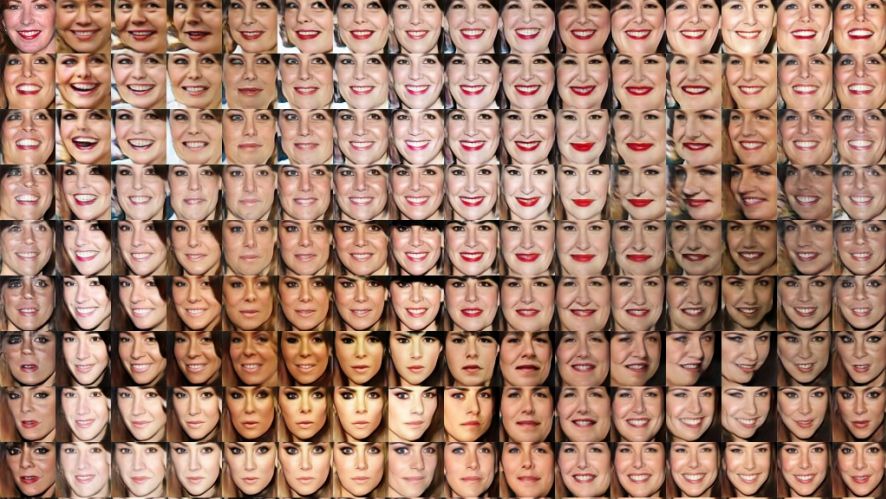

A new and promising direction is to leverage recent advances in machine learning and use a Generative Adversarial Network (main paper), better known as a GAN, to reliably increase the size of your training dataset. The screenshot above, taken from this paper, shows an example of face generation using a GAN: only the top left image is real. While this approach is still very experimental (here is one of the latest papers on the topic), it is exciting because it paves the way to generating meaningful attack variations at scale.

Ambiguous data & taxonomy

The third challenge that arises when building a classifier is that what we consider bad is often ill defined, and there are a lot of borderline cases where even humans struggle to make a decision.

For example, the sentence “I am going to kill you” can either be viewed as the sign of a healthy competition if you are playing a video game with your buddies or it can be a threat if it is used in a serious argument. More generally, it is important to realize that what counts as unwanted content depends on context, culture, and setting.

Accordingly, it is impossible, except for very specific use cases such as profanity or gibberish detection, to build universal classifiers that will work across all products and for all users.

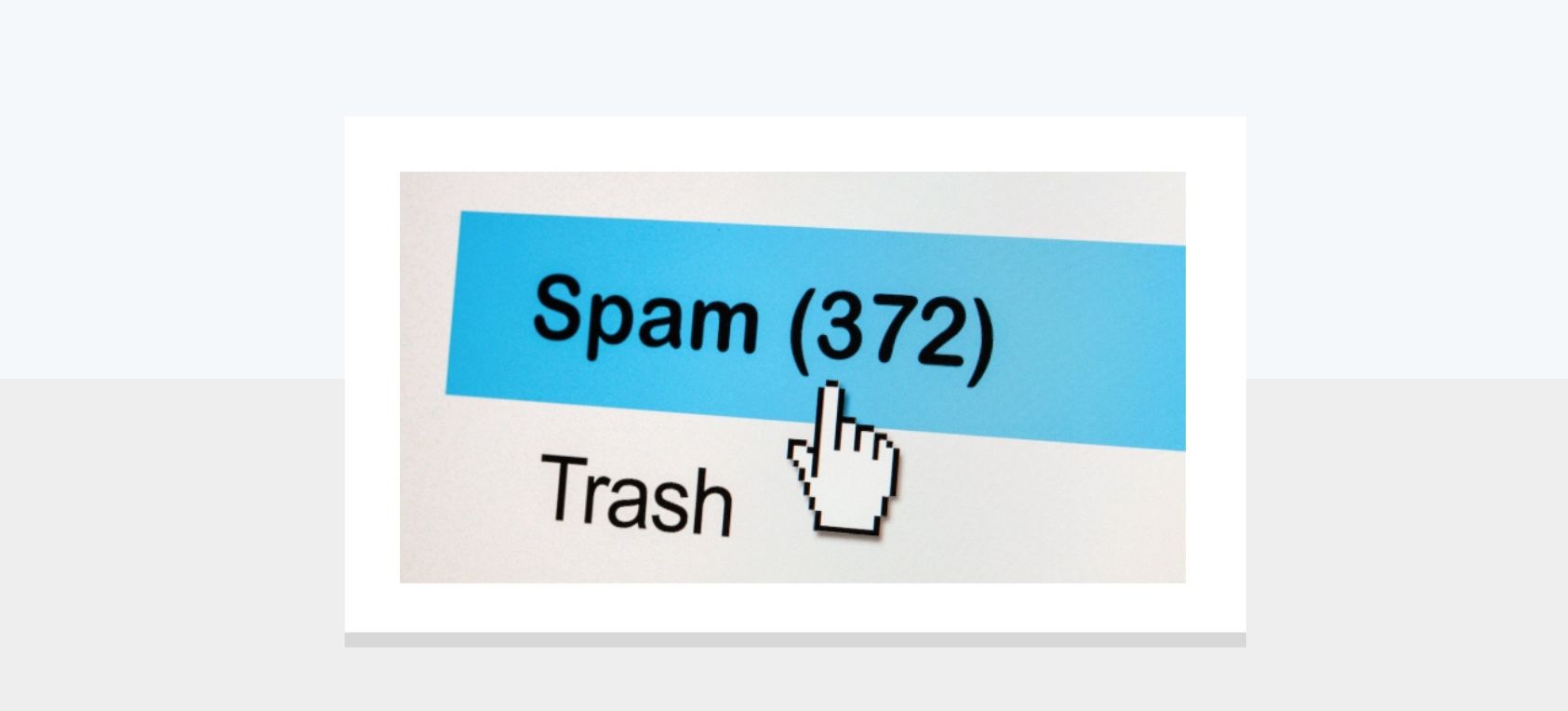

When you think about it, even the well-established concept of spam is ill defined and means different things to different people. For example, countless Gmail users decide that the emails coming from a mailing list they willingly subscribed to a long time ago are now spam because they lost interest in the topic.

Here are three way to help your classifier deal with ambiguity:

-

Model context, culture and settings: Easier said than done! Add features that represent the context in which the classification is performed. This will ensure that the classifier is able to reach a different decision when the same data is used in different settings.

-

Use personalized models: Your models need to be architectured in a way that takes into account user interests and levels of tolerance. This can be done by adding some features (pioneer paper) that model user behavior.

-

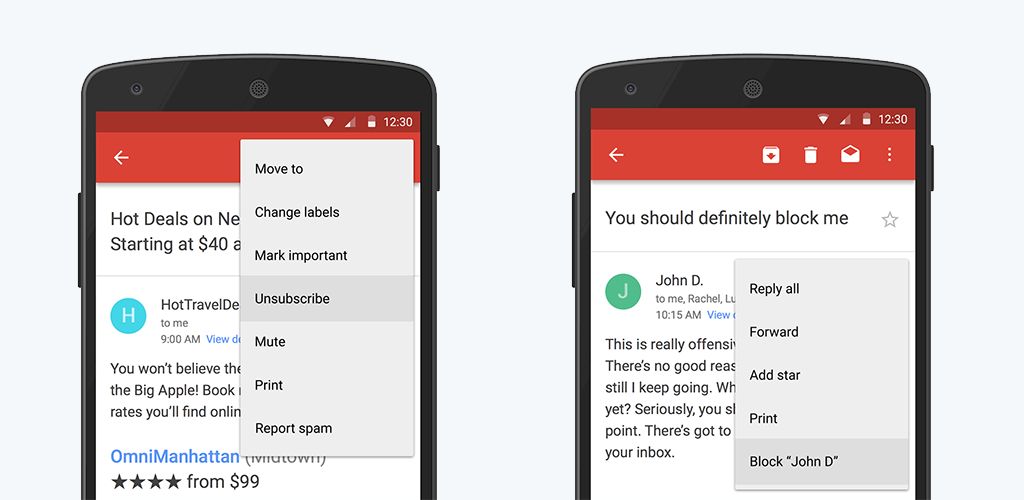

Offer users additional meaningful choices: You can reduce ambiguity by providing users with alternative choices that are more meaningful than a generic reporting mechanism. Those more precise choices reduce ambiguity by reducing the number of use cases that are clamped behind a single ill-defined concept, such as spam.

Here is a concrete example of how the addition of meaningful choices reduces ambiguity. Back in 2015, Gmail started offering its users the ability to easily unsubscribe from mailing lists and block senders, giving them more control over their inboxes. Under the hood, these new options help the classifiers because they reduce the ambiguity of what is marked as spam.
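To make the first suggestion above (modeling context) a little more tangible, here is a minimal sketch of a feature builder that combines content features with context features. The `surface` key, the feature naming scheme, and the `build_features` helper are all assumptions made for illustration; the point is only that identical text produces different feature vectors in different settings.

```python
from typing import Dict


def build_features(text: str, context: Dict[str, str]) -> Dict[str, float]:
    """Combine content features with context features into one sparse feature dict."""
    words = text.lower().split()
    # Content features: bag of words.
    features = {f"word={w}": 1.0 for w in words}
    # Context features: one-hot encode every (key, value) pair.
    for key, value in context.items():
        features[f"ctx:{key}={value}"] = 1.0
    # Cross features let a linear model weigh a word differently per setting.
    surface = context.get("surface", "unknown")
    for w in words:
        features[f"word={w}|surface={surface}"] = 1.0
    return features


message = "I am going to kill you"
in_game = build_features(message, {"surface": "game_chat"})
in_private = build_features(message, {"surface": "direct_message"})
```

Because the cross features differ between the two calls, a classifier trained on such features is able to learn a different decision for the same sentence in a game chat and in a private message.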

Lack of obvious features

Our fourth and last training challenge is that some products lack obvious features. Until now, we have focused on classifying rich content such as text, binaries and images, but not every product has such rich content.

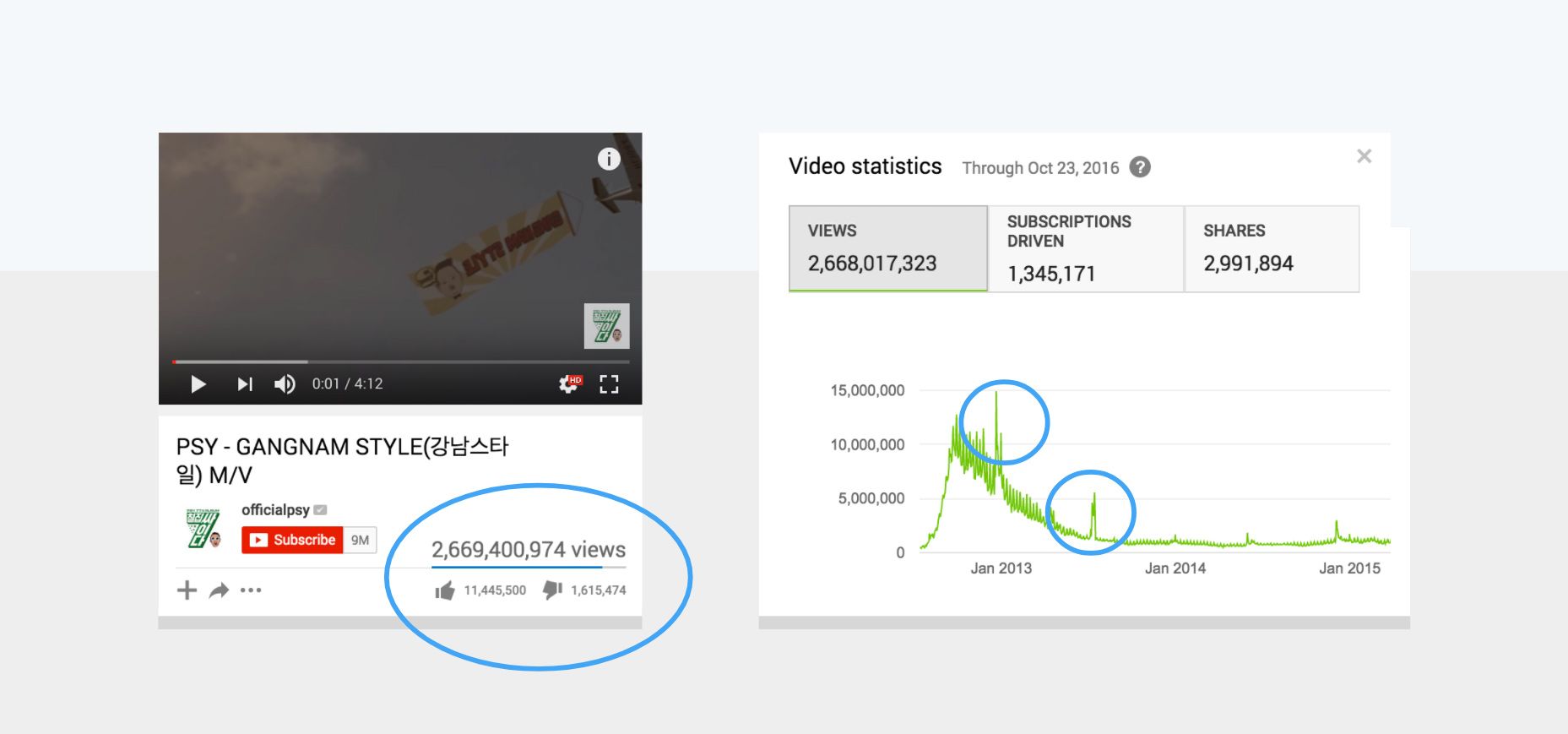

For example, YouTube has to be defended against fake views, and there are not a lot of obvious features that can be leveraged to do so. Looking at the view count timeline for the famous Gangnam Style video, you will notice two anomalous peaks. These might come from spammers, or the video might simply have had huge spikes due to virality. It is impossible to tell by just looking at how the view count grew over time.

In general, AI thrives on feature-rich problems such as text or image classification; however, abuse fighters have to make AI work across the board to protect all users and products. This need to cover the entire attack surface leads us to use AI to tackle use cases that are less than ideal, and sooner or later we have to face a hard truth: some products that need protection lack the rich features AI thrives on.

Fortunately, you can (partially) work around the lack of rich features. In a nutshell, the way to build an accurate classifier when you don’t have enough content features is to leverage auxiliary data as much as possible. Here are three key sources of auxiliary data you can potentially use:

- Context: Everything related to the client software or network can be used, including the user agent, the client IP address and the screen resolution.

- Temporal behavior: Instead of looking at an event in isolation, you can model the sequence of actions that is generated by each user. You can also look at the sequence of actions that target a specific artifact, such as a given video. Those temporal sequences provide a rich set of statistical features.

- Anomaly detection: It is impossible for an attacker to fully behave like a normal user, so anomaly features can almost always be used to boost detection accuracy.

The last point is not as obvious as it seems, so let’s dive deeper into it.

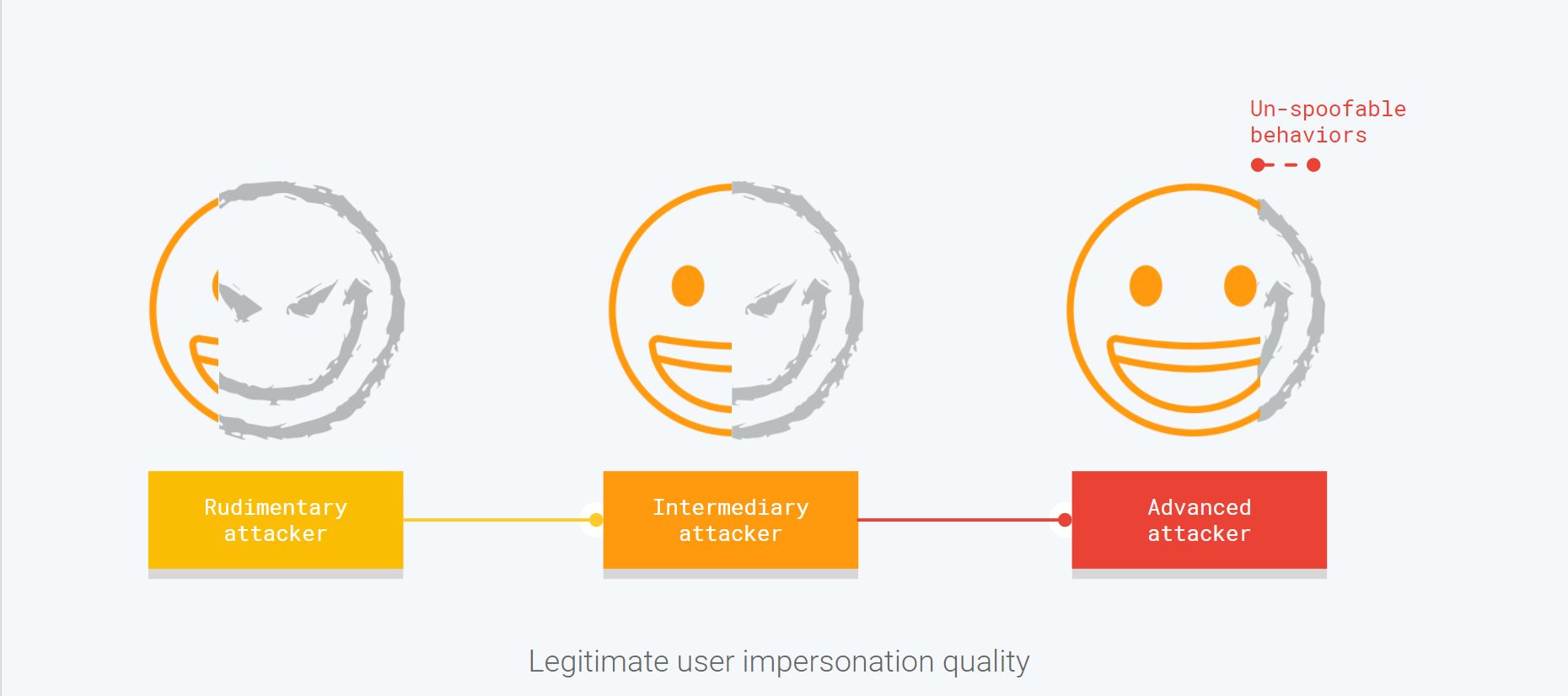

At its core, what separates rudimentary attackers from advanced ones is their ability to accurately impersonate legitimate user behavior. However, because attackers aim at gaming the system, there always will be some behaviors that they can’t spoof.

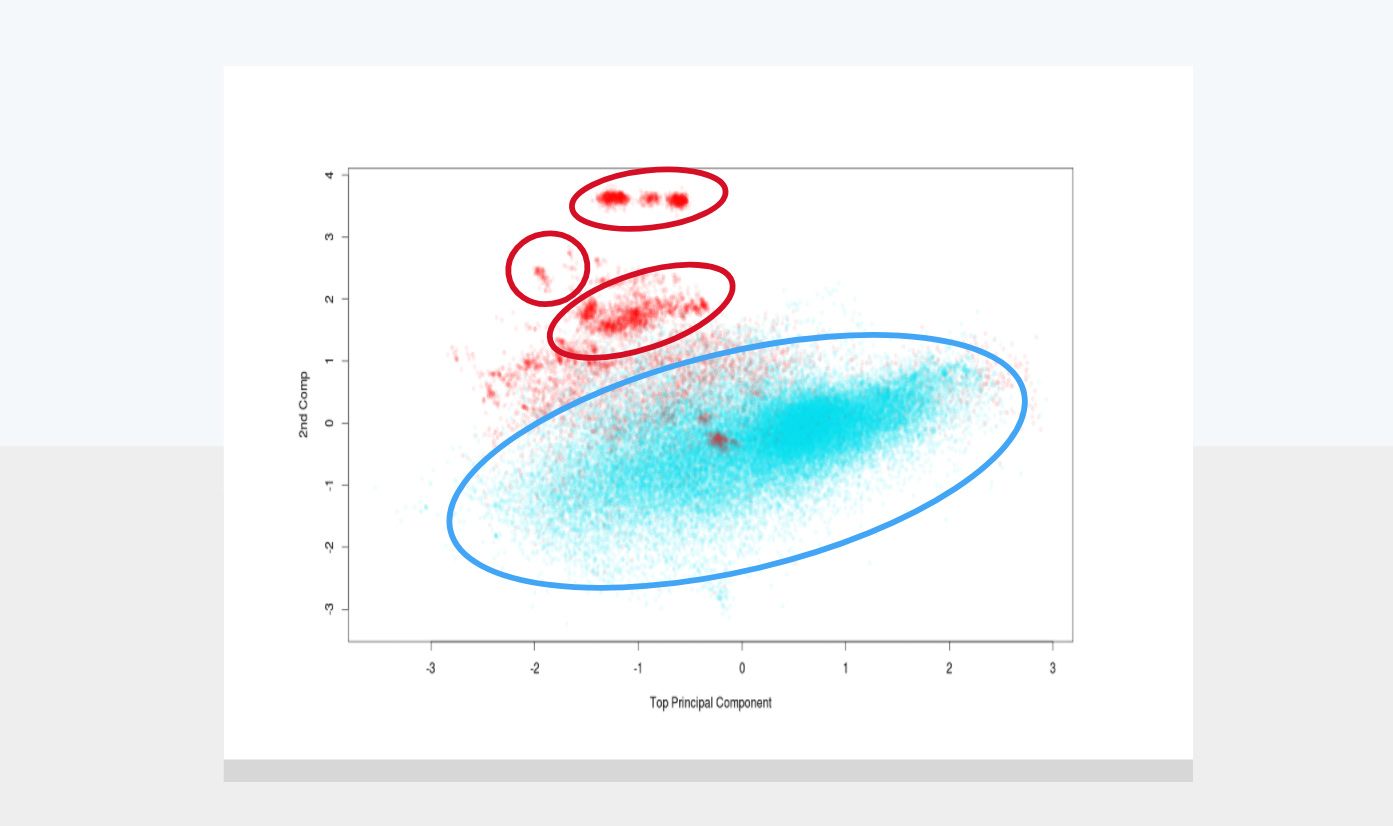

It is those unspoofable behaviors that we aim at detecting using one-class classification. Introduced circa 1996, the idea behind one-class classification is to use AI to find all the entities belonging to a single class (the normal behavior in our case) out of all entities that exist in a dataset. Every entity that is not a member of that class is then considered an outlier.

For abuse purposes, one-class classification makes it possible to detect anomalies and potential attacks even when you have no attack examples. For example, the figure above shows in red a set of malicious IPs attacking Google products that were detected using this type of approach.

Overall, one-class classification is a great complement to more traditional AI systems as its requirements are fundamentally different. As mentioned earlier, you can even take this one step further and feed the result of your one-class classifier to a standard one (binary class) to boost its accuracy.
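As a very rough sketch of the idea, the toy model below learns only from normal traffic and flags anything that deviates too far from it. It is a deliberately simplified stand-in (per-feature z-scores) rather than the kind of one-class model used in practice, and the features (requests per minute and distinct accounts touched per IP), the class name, and the threshold are all hypothetical.

```python
from statistics import mean, pstdev
from typing import List, Sequence


class ZScoreOneClass:
    """Tiny one-class model: learns what normal looks like and flags the rest."""

    def __init__(self, threshold: float = 3.0) -> None:
        self.threshold = threshold
        self.means: List[float] = []
        self.stds: List[float] = []

    def fit(self, normal_rows: Sequence[Sequence[float]]) -> "ZScoreOneClass":
        """Fit on examples of the normal class only; no attack examples needed."""
        columns = list(zip(*normal_rows))
        self.means = [mean(col) for col in columns]
        # Fall back to 1.0 for constant features so scoring never divides by zero.
        self.stds = [pstdev(col) or 1.0 for col in columns]
        return self

    def score(self, row: Sequence[float]) -> float:
        """Largest absolute z-score across features; higher means more anomalous."""
        return max(abs(x - m) / s for x, m, s in zip(row, self.means, self.stds))

    def is_outlier(self, row: Sequence[float]) -> bool:
        return self.score(row) > self.threshold


# Hypothetical per-IP features: [requests per minute, distinct accounts touched].
normal_traffic = [[4, 1], [6, 1], [5, 2], [5, 1], [7, 2], [3, 1]]
detector = ZScoreOneClass().fit(normal_traffic)

typical_ip = [5, 1]
suspicious_ip = [120, 40]

# The anomaly score can also be appended as an extra feature for a binary classifier.
augmented_row = suspicious_ip + [detector.score(suspicious_ip)]
```

The last line illustrates the combination mentioned above: the one-class score becomes one more input feature for a standard binary classifier.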

This wraps up our deep dive into the challenges faced while training an anti-abuse classifier. The next post covers the challenges that arise when you start running your classifier in production. The final post of the series discusses the various attacks against classifiers and how to mitigate them.

Thank you for reading this post till the end! If you enjoyed it, don’t forget to share it on your favorite social network so that your friends and colleagues can enjoy it too and learn about AI and anti-abuse.

To get notified when my next post is online, follow me on Twitter, Facebook, Google+, or LinkedIn. You can also get the full posts directly in your inbox by subscribing to the mailing list or via RSS.

A bientôt!